This post outlines one possible design for based zk (zero-knowledge) rollups operating over Kaspa’s UTXO-based L1 (see @hashdag’s post for broader context). This is by no means a final design. It is the outcome of several months of discussions with @hashdag, @reshmem and Ilia@starkware, presented here to align the core R&D discussion in the Kaspa community around common ground before finalizing (the L1 part of) the design.

In this post I make a deliberate effort to use the established jargon of the broader smart contract (SC) community. To this end, I suggest that unfamiliar readers review documents such as eth-research-based, eth-docs-zk and the links nested therein before proceeding.

Brief description of based zk rollups

A zk rollup is an L2 scaling solution where L2 operators use succinct zk validity proofs to prove correct smart contract execution without requiring L1 validators to run the full computations themselves. A based zk rollup is a type of rollup design where the L2 is committed to operations submitted to L1 as data blobs (payloads in Kaspa’s jargon), and cannot censor or manipulate the order dictated by L1.

Based zk rollups as an L1 ↔ L2 protocol

Since based zk rollups represent an interaction between the base layer (L1) and the rollup layer (L2), the L1 must expose certain functionalities to support rollup operations. Conceptually, this defines a protocol between L1 validators and L2 provers.

Logical functionalities expected from L1:

1. Aggregate rollup transactions: L1 aggregates user-submitted data blobs in the order received, providing reliable anchoring for L2 validity proofs.

2. Verify proof submissions: L2 operators submit zk proofs that confirm correct processing of these transactions, ensuring L2 execution follows the agreed protocol and updating the L2 state commitments stored on L1.

3. Entry/Exit of L1 funds: The protocol must enable deposits and withdrawals of native L1 funds to and from L2, ensuring consistent state and authorized spending.

Key point: L1 acting as the zk proof verifier (point 2) is crucial for enabling the native currency (KAS) to serve as collateral for L2 financial activity, underscoring the importance of point 3. Additional benefits of having L1 verify the proofs include establishing a clear, single point of verification (enforced by L1 consensus rather than requiring each interested party to perform it individually) and providing proof of state commitment for new L2 validators.

VM-based vs. UTXO-based L1

When the L1 is a fully-fledged smart contract VM, the establishment of this protocol is straightforward: the rollup designer (i) publishes a core contract on L1 with a set of rules for L1 validators to follow when interacting with the rollup; and (ii) specifies a program hash (PROG) that the L2 provers are required to prove the execution of (via a zk proof, ZKP). This two-sided interplay establishes a well-defined commitment of the rollup to its users.

For UTXO/scripting-based L1s like Kaspa, a more embedded approach is required in order to support various rollup designs in the most generic and flexible way.

Technical design of the L1 side of the protocol in UTXO systems

To begin with, I present a simplified design that assumes a single rollup managed by a single state commitment on L1. Following this minimum-viable design, I discuss the implications of relaxing these assumptions.

Kaspa DAG preliminaries

A block $B \in G$ has a selected parent $\in \text{parents}(B)$, as chosen by GHOSTDAG. The mergeset of $B$ is defined as $\text{past}(B) \setminus \text{past}(\text{selected parent of } B)$. The block inherits the ordering from its selected parent and appends its mergeset in some consensus-agreed topological order. Block $C$ is considered a chain block from $B$’s point of view if there is a path of selected-parent links from $B$ to $C$ (which means the DAG ordering of $B$ is an extension of $C$’s DAG ordering).
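The following toy Python model illustrates these definitions on a five-block DAG. It assumes the selected parents are given (GHOSTDAG itself is not implemented), and its topological tie-breaking rule (parents first, then by block name) is a placeholder for the consensus-agreed order, not Kaspa’s actual rule. `PARENTS` and `SELECTED_PARENT` are hypothetical toy data.

```python
# Toy DAG (hypothetical data): block -> parents. Selected parents are given, not computed.
PARENTS = {"G": [], "A": ["G"], "B": ["G"], "C": ["A", "B"], "D": ["C"]}
SELECTED_PARENT = {"A": "G", "B": "G", "C": "A", "D": "C"}  # genesis "G" has none


def past(block):
    """All ancestors of `block`, excluding the block itself."""
    seen, stack = set(), list(PARENTS[block])
    while stack:
        b = stack.pop()
        if b not in seen:
            seen.add(b)
            stack.extend(PARENTS[b])
    return seen


def mergeset(block):
    """past(B) minus past(selected parent of B); this includes the selected parent."""
    return past(block) - past(SELECTED_PARENT[block])


def topo_order(blocks):
    """Parents before children, ties broken by name (placeholder for the consensus rule)."""
    remaining, order = set(blocks), []
    while remaining:
        ready = sorted(b for b in remaining if not (set(PARENTS[b]) & remaining))
        order.append(ready[0])
        remaining.remove(ready[0])
    return order


def dag_ordering(block):
    """Ordered past(B): the selected parent's ordering followed by B's mergeset."""
    if block not in SELECTED_PARENT:  # genesis
        return []
    return dag_ordering(SELECTED_PARENT[block]) + topo_order(mergeset(block))


def is_chain_block(c, b):
    """True if c is reached from b by following one or more selected-parent links."""
    while b in SELECTED_PARENT:
        b = SELECTED_PARENT[b]
        if b == c:
            return True
    return False


print(dag_ordering("D"))                                   # ['G', 'A', 'B', 'C']
print(is_chain_block("A", "D"), is_chain_block("B", "D"))  # True False
```

Note that `dag_ordering("C")` is a prefix of `dag_ordering("D")`, matching the statement that a block’s ordering extends that of its chain blocks; `B` is merged but is not a chain block of `D`.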

Detailed protocol design

Recursive DAG ordering commitments:

A new header field, called ordered_history_merkle_root, will be introduced to commit to the full, ordered transaction history. The purpose of this field is to maintain a recursive commitment to the complete sequence of (rollup) transactions. The leftmost leaf of the underlying Merkle tree will contain the selected parent’s ordered_history_merkle_root, thereby recursively referencing the entire ordered history. The remaining tree leaves correspond to the ordered sequence of mergeset transactions, as induced by the mergeset block order.
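A minimal sketch of how such a recursive root could be computed, assuming SHA-256 and a simple binary Merkle tree that pairs an odd node with itself. Both choices are placeholders (the hash function is explicitly left open at the end of this post), and `GENESIS_ROOT` is a hypothetical starting value.

```python
import hashlib


def h(data: bytes) -> bytes:
    # SHA-256 is a placeholder; the actual hash function is still an open question.
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Binary Merkle root; an odd node at any level is paired with itself (assumption)."""
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def ordered_history_merkle_root(parent_root: bytes, mergeset_tx_hashes: list[bytes]) -> bytes:
    """Leftmost leaf: the selected parent's root; then the mergeset txs in DAG order."""
    return merkle_root([parent_root] + mergeset_tx_hashes)


GENESIS_ROOT = bytes(32)  # hypothetical starting root
r1 = ordered_history_merkle_root(GENESIS_ROOT, [h(b"tx1"), h(b"tx2")])
r2 = ordered_history_merkle_root(r1, [h(b"tx3")])  # commits to tx1, tx2 (via r1) and tx3
```

Because each root’s leftmost leaf is the previous root, a single 32-byte value such as `r2` commits to the entire ordered history, and inclusion of `tx1` can be shown by a Merkle path from `r2` down through `r1`.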

ZK-related opcodes:

- OpZkVerify: An opcode that accepts public proof arguments and verifies the correctness of the zk proof (our final design might decompose this opcode into more basic cryptographic primitives; however, this is out of scope for this post and will be followed up by @reshmem).
- OpChainBlockHistoryRoot: An opcode that provides access to the ordered_history_merkle_root field of a previous chain block. This opcode expects a block hash as an argument and fails if the block has been pruned (i.e., its depth has passed a threshold) or is not a chain block from the perspective of the merging block executing the script. It will be used to supply valid anchor points up to which the ZKP can prove execution.
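The failure conditions of OpChainBlockHistoryRoot can be sketched in Python as follows. This assumes the merging block’s view of its selected chain is available as a dictionary, uses a plain height counter in place of whatever depth measure consensus actually uses, and `PRUNING_DEPTH` is a hypothetical value, not a Kaspa parameter.

```python
class ScriptError(Exception):
    """Raised when script execution fails (the spending transaction is invalid)."""


PRUNING_DEPTH = 1000  # hypothetical threshold, not Kaspa's actual parameter


def op_chain_block_history_root(block_hash, selected_chain, current_height,
                                pruning_depth=PRUNING_DEPTH):
    """Return the ordered_history_merkle_root of a chain block, or fail.

    selected_chain maps block hash -> (height, history_root) for the chain blocks
    seen from the merging block; height stands in for the real depth measure.
    """
    entry = selected_chain.get(block_hash)
    if entry is None:
        raise ScriptError("not a chain block from the merging block's point of view")
    height, history_root = entry
    if current_height - height > pruning_depth:
        raise ScriptError("block is past the pruning threshold")
    return history_root


chain = {"c1": (10, b"\x01" * 32), "c2": (11, b"\x02" * 32)}
root = op_chain_block_history_root("c2", chain, current_height=12)  # b"\x02" * 32
```

Failing (rather than returning an empty value) means any script that relies on an invalid anchor simply cannot be satisfied, which is what makes the anchor check binding.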

State-commitment UTXO:

The UTXO responsible for managing the rollup state will appear as an ordinary UTXO from L1’s perspective, with no special handling or differentiation at the base layer. The UTXO spk (script_public_key) will be of type p2sh (pay to script hash), which will represent the hash of a more complex structure. Specifically, it will be the hash of the following pre-image:

- PROG (the hash of the permanent program L2 is obligated to execute)
- state_commitment (the L2 state commitment)
- history_merkle_root (the ordered_history_merkle_root from L1’s header, representing the point in the DAG ordering up to which L1 transactions have been processed to produce the corresponding L2 state commitment)
- Additional auxiliary data required to verify a ZKP (e.g., a well-known verification key)
- The remaining execution script (specified below)
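A sketch of the pre-image and its p2sh hash, assuming a hypothetical length-prefixed serialization and BLAKE2b-256; neither is a specified Kaspa format, and `StateCommitmentPreimage` / `p2sh_hash` are illustrative names. It shows that advancing the state changes the spk while PROG stays fixed.

```python
import hashlib
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class StateCommitmentPreimage:  # hypothetical structure mirroring the list above
    prog: bytes                 # hash of the permanent L2 program (PROG)
    state_commitment: bytes     # L2 state commitment
    history_merkle_root: bytes  # ordered_history_merkle_root processed up to
    aux: bytes                  # auxiliary ZKP data, e.g. a verification key
    script: bytes               # the remaining execution script

    def serialize(self) -> bytes:
        # Length-prefixed concatenation: a hypothetical encoding, not a Kaspa format.
        fields = (self.prog, self.state_commitment, self.history_merkle_root,
                  self.aux, self.script)
        return b"".join(len(f).to_bytes(4, "little") + f for f in fields)


def p2sh_hash(preimage: StateCommitmentPreimage) -> bytes:
    # BLAKE2b-256 is used for illustration only.
    return hashlib.blake2b(preimage.serialize(), digest_size=32).digest()


before = StateCommitmentPreimage(b"P" * 32, b"S0" * 16, b"H0" * 16, b"vk", b"script")
after = replace(before, state_commitment=b"S1" * 16, history_merkle_root=b"H1" * 16)
spk_before, spk_after = p2sh_hash(before), p2sh_hash(after)
```

From L1’s point of view both outputs are ordinary p2sh UTXOs; only the revealed pre-image ties them to the same rollup program.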

Proof transaction:

In its minimal form, a proof transaction consists of an incoming state-commitment UTXO, an outgoing updated state-commitment UTXO and a signature revealing the pre-images and the ZKP.

Given such UTXOs in and out and a signature script sig, the following pseudocode outlines the script execution required to verify the validity of this signature (and, logically, the L2 state transition):

```
// All data is extracted from sig
// Some operations below might use new KIP-10 introspection opcodes
// Skipping the part where the script itself is proven to be in the preimage
// (which is standard p2sh processing)

// Prove preimages
show (prog_in,  commit_in,  hr_in,  ...) is the preimage of in.spk
show (prog_out, commit_out, hr_out, ...) is the preimage of out.spk
verify prog_in == prog_out  // verify prog is preserved

// Verify L1 history-root anchoring
extract block_hash from sig  // the chain block we are claiming execution to
hr_ref <- OpChainBlockHistoryRoot(block_hash)  // fails if anchoring is invalid
verify hr_ref == hr_out

// Verify the proof
extract zkp from sig
OpZkVerify(proof: zkp, proof_pub_inputs: [commit_in, commit_out, hr_in, hr_out])
// ^ omitting prog and other auxiliary verification data
```
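To make the control flow of this script concrete, here is a runnable Python simulation of the same checks. It is a sketch only: `spk_of` uses a hypothetical SHA-256 pre-image hash (auxiliary data and the script are omitted), and both opcodes are replaced by mock callables with no real chain lookup or proof system behind them.

```python
import hashlib
from dataclasses import dataclass


class ScriptError(Exception):
    """Script failure: L1 rejects the proof transaction."""


def spk_of(prog: bytes, commit: bytes, hr: bytes) -> bytes:
    # Hypothetical p2sh pre-image hash; aux data and the script itself are omitted.
    return hashlib.sha256(prog + commit + hr).digest()


@dataclass
class Sig:  # hypothetical container for the data revealed in the signature script
    prog_in: bytes
    commit_in: bytes
    hr_in: bytes
    prog_out: bytes
    commit_out: bytes
    hr_out: bytes
    block_hash: str  # the chain block execution is claimed up to
    zkp: bytes


def verify_proof_tx(in_spk, out_spk, sig, chain_history_root, zk_verify):
    # Prove pre-images
    if spk_of(sig.prog_in, sig.commit_in, sig.hr_in) != in_spk:
        raise ScriptError("input pre-image mismatch")
    if spk_of(sig.prog_out, sig.commit_out, sig.hr_out) != out_spk:
        raise ScriptError("output pre-image mismatch")
    if sig.prog_in != sig.prog_out:
        raise ScriptError("PROG must be preserved")
    # L1 history-root anchoring (chain_history_root models OpChainBlockHistoryRoot)
    if chain_history_root(sig.block_hash) != sig.hr_out:
        raise ScriptError("hr_out is not the claimed chain block's history root")
    # Proof verification (zk_verify models OpZkVerify)
    if not zk_verify(sig.zkp, [sig.commit_in, sig.commit_out, sig.hr_in, sig.hr_out]):
        raise ScriptError("invalid zk proof")
    return True


# Mocks for illustration only: no real chain lookup or proof system.
CHAIN = {"C_i": b"H" * 32}


def mock_chain_history_root(block_hash):
    if block_hash not in CHAIN:
        raise ScriptError("not a chain block")
    return CHAIN[block_hash]


def mock_zk_verify(zkp, pub_inputs):
    return zkp == b"valid-proof"


PROG = b"P" * 32
sig = Sig(PROG, b"s0" * 16, b"h0" * 16, PROG, b"s1" * 16, b"H" * 32, "C_i", b"valid-proof")
in_spk = spk_of(PROG, b"s0" * 16, b"h0" * 16)
out_spk = spk_of(PROG, b"s1" * 16, b"H" * 32)
accepted = verify_proof_tx(in_spk, out_spk, sig, mock_chain_history_root, mock_zk_verify)
```

Any failed check raises, so L1 would reject the transaction and the old state-commitment UTXO would stay unspent.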

L2 program semantics:

In order to provide the desired based rollup guarantees, the execution specified by the (publicly known) L2 PROG must strictly adhere to the following rules:

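- Reveal (through private program inputs) the full tree diff claimed to be processed, $T(hr_{out}) \setminus T(hr_{in})$, where $T(hr)$ denotes the transactions committed to, directly or recursively, by the history root $hr$.
- Execute the identified transactions in order, without any additions or removals.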
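The two rules can be sketched from the PROG side in Python, assuming history roots are built as binary Merkle trees over SHA-256 whose leftmost leaf is the previous root (placeholder choices). The hypothetical `extract_diff` recomputes the roots from `hr_in` using the revealed per-chain-block transactions and accepts only if it reaches `hr_out`, so a prover cannot omit, add or reorder transactions.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()  # hash function is a placeholder


def merkle_root(leaves):
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # pair an odd node with itself (assumption)
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def extract_diff(hr_in, hr_out, mergesets):
    """Recompute history roots from hr_in using the private inputs `mergesets`
    (per-chain-block tx payloads, oldest first) and require the result to be hr_out.
    Returns the transactions of T(hr_out) minus T(hr_in), in DAG order."""
    root, diff = hr_in, []
    for txs in mergesets:
        root = merkle_root([root] + [h(tx) for tx in txs])  # leftmost leaf links back
        diff.extend(txs)
    if root != hr_out:
        raise ValueError("revealed diff does not lead from hr_in to hr_out")
    return diff


def execute_in_order(state, txs, apply_tx):
    for tx in txs:  # no additions, removals, or reordering
        state = apply_tx(state, tx)
    return state


hr_in = bytes(32)
blocks = [[b"tx1", b"tx2"], [b"tx3"]]
hr_out = merkle_root([merkle_root([hr_in, h(b"tx1"), h(b"tx2")]), h(b"tx3")])
diff = extract_diff(hr_in, hr_out, blocks)  # [b'tx1', b'tx2', b'tx3']
final = execute_in_order([], diff, lambda s, tx: s + [tx])
```

Revealing `[[b"tx1"], [b"tx3"]]` instead (skipping `tx2`) yields a different root and is rejected.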

Observe that the first rule, combined with the OpChainBlockHistoryRoot call within the script, ensures that the state commitment always advances to a valid state:

- The script verifies that hr_out is a valid chain block history commitment.
- The PROG verifies that hr_out recursively references hr_in and, as a result, must be an extension of it.
  - This verification by PROG is enforced by L1 through OpZkVerify.

Operational flow:

- $Tx_1, Tx_2, \ldots, Tx_n$ with data payloads are submitted to the DAG, included in blocks, and accepted by chain blocks $C_1, C_2, \ldots, C_n$, respectively.
- The transaction hashes are embedded into the ordered_history_merkle_root fields of the corresponding headers, enforced as part of L1 consensus validation.
- An L2 prover chooses to prove execution up to block $C_i$.
- A proof transaction is created, referencing the initial state-commitment UTXO and producing a new state-commitment UTXO that encodes the new history root ordered_history_merkle_root($C_i$).
- The proof is validated by L1, and the new state-commitment UTXO replaces the previous one in the UTXO set, recording the L2 state transition on L1.
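The flow can be traced end to end with a small Python toy, assuming the same placeholder Merkle construction (SHA-256, leftmost leaf = previous root). The outpoint names, the `(state, history_root)` tuple stored per UTXO, and the `script_ok` flag standing in for the L1 script check are all hypothetical simplifications.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()  # placeholder hash


def merkle_root(leaves):
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def chain_roots(start_root, accepted_txs_per_chain_block):
    """Steps 1-2: consensus embeds each chain block's accepted txs into its history root."""
    roots, root = [], start_root
    for txs in accepted_txs_per_chain_block:
        root = merkle_root([root] + [h(tx) for tx in txs])
        roots.append(root)
    return roots


def apply_proof_tx(utxo_set, spent, created, new_commitment, script_ok):
    """Step 5: if the L1 script check passed, swap the state-commitment UTXO."""
    if not script_ok:
        raise ValueError("proof transaction rejected")
    if spent not in utxo_set:
        raise ValueError("state-commitment UTXO is not unspent")
    updated = {k: v for k, v in utxo_set.items() if k != spent}
    updated[created] = new_commitment
    return updated


start = bytes(32)
roots = chain_roots(start, [[b"tx1"], [b"tx2"], [b"tx3"]])  # roots of C_1, C_2, C_3
i = 2                                                       # step 3: prove up to C_2
utxos = {("genesis_tx", 0): (b"state-0", start)}            # hypothetical outpoints
utxos = apply_proof_tx(utxos, ("genesis_tx", 0), ("proof_tx", 0),  # step 4
                       (b"state-2", roots[i - 1]), script_ok=True)
```

Exactly one state-commitment UTXO exists before and after the transition, which is the single-commitment assumption this section starts from.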

Soundness and flexibility of the proposed design

The presented design supports fully based zk rollups by embedding ordered history Merkle roots into block headers and introducing new zk-related opcodes into Kaspa’s script engine. The history roots provide modular and complete evidence of DAG ordering, enabling provers to choose their proving granularity over any span of consecutive chain blocks (although processing must still proceed in whole mergesets). The combination of L1 script opcodes and L2 PROG programmability provides substantial flexibility for rollup design.

Points for subsequent discussion

Despite its focus on the most baseline design, this post is already becoming rather long, so I’ll wrap up by briefly outlining some key “zoom-in” points for further discussion and refinement.

-

Uniqueness of the state-commitment UTXO

1.1 Challenge: Proving that a specific UTXO represents “the” well-known authorized state commitment for a given rollup. Although state transitions do not require such proof, it is important, for instance, for proving L2 state to a newly syncing L2 node.

1.2 Solution Direction: L2 source code can define a “genesis” state (encoded in the initial UTXO), and zk validity proofs can recursively attest that the current state originated from that genesis. -

Entry/Exit of L1 funds

2.1 Allow deposits to static addresses representing the L2.

2.2 Allow withdrawals back to L1 (as additional proof transaction outcomes).

2.3 The N-to-const problem: Address the challenges arising from local limits on transaction size and the potentially many outcomes resulting from a batched proof operation. -

Extension to many rollups ¹

3.1 Requirement/Desire: Each L2 rollup prover should only need to execute O(\text{rollup activity}) within theirPROGproof execution.

3.2 Solution Direction: Manage the L1 history Merkle tree by grouping by rollup and further dividing into activity/inactivity branches. Note: Applying the grouping recursively might result in long-term storage requirements per rollup, which has broader implications. -

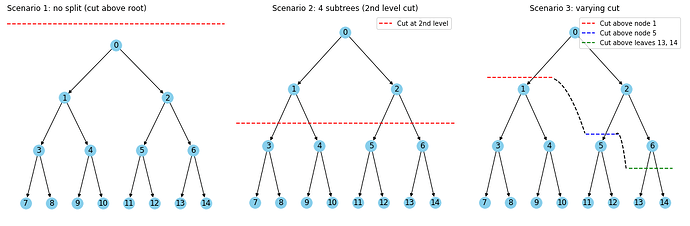

Multiple state commitments per rollup

4.1 Challenge: Allowing L1 to manage multiple state commitments for a single rollup in order to balance scalability and validity (allowing different provers to partially advance independent segments/logic zones of L2 state).

4.2 Solution Direction: Implement partitioned state commitments on L1, representing dynamic cuts of the L2 state tree. If taken to the extreme, this solution could allow a user to solely control their own L2 account via a dedicated state commitment on L1.

Another major aspect not discussed in this post is the zero-knowledge technology stack to be supported and its implications for L1 components (e.g., the hash function used to construct the Merkle history trees). I’m leaving this part to @reshmem, @aspect and others for in-depth follow-up posts.

[¹] The introduction of many rollups (or subnets in Kaspa’s jargon) touches on conceptual topics such as L2 state fragmentation and atomic composability, which are beyond the scope of this post and were preliminarily discussed in @hashdag’s post. Here, I’m referring merely to the technical definitions and consequences.